Machine learning algorithms are the backbone of data science, transforming the way we analyze data, make predictions, and automate decisions. The field of machine learning continues to grow rapidly, and the algorithms that power this revolution are crucial tools for any data scientist. In this article, we will explore the seven most essential machine learning algorithms that every data scientist should master, along with practical insights and applications.

Understanding Machine Learning

At its core, machine learning is a subset of artificial intelligence (AI) that allows computers to learn from data and improve their performance over time without being explicitly programmed. It relies on algorithms to analyze large datasets, uncover patterns, and make predictions. These algorithms play an instrumental role in predictive modeling, anomaly detection, and natural language processing (NLP). In today’s data-driven world, mastering these techniques is a must for anyone aiming to succeed as a data scientist.

Why Machine Learning Algorithms Matter

Machine learning algorithms enable data scientists to extract meaningful insights from vast amounts of data. This knowledge can be used to solve complex problems in fields ranging from finance to healthcare. The demand for skilled data scientists who understand these algorithms has never been higher. By mastering the right algorithms, you can build models that predict customer behavior, detect fraudulent activities, and automate business processes.

Supervised vs. Unsupervised Learning

Before diving into the seven algorithms, it’s important to distinguish between the two primary types of machine learning: supervised and unsupervised learning. In supervised learning, the algorithm learns from labeled data, meaning each training example comes paired with the correct output. This is essential for tasks like classification and regression. Unsupervised learning, on the other hand, works with unlabeled data, helping the model identify hidden patterns without prior knowledge of the outputs. Clustering and dimensionality reduction are common applications of unsupervised learning.

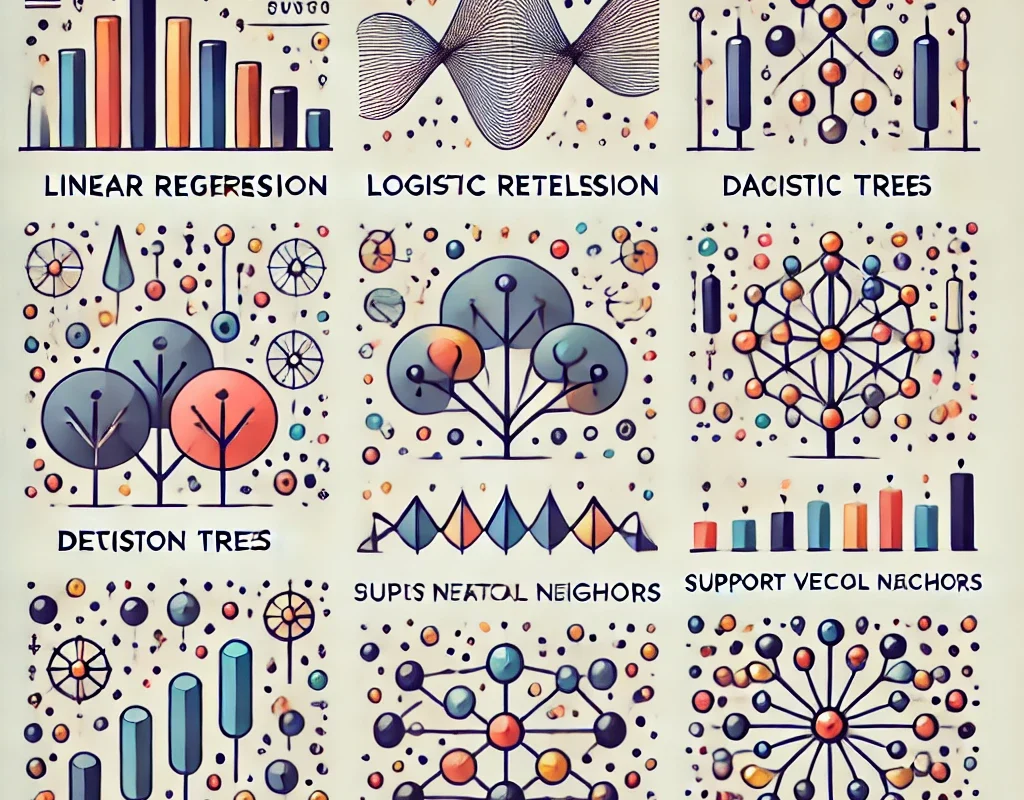

Algorithm #1: Linear Regression

Linear regression is one of the simplest yet most powerful algorithms in machine learning. It models the relationship between a dependent variable and one or more independent variables by fitting a straight line (or, with several inputs, a plane or hyperplane) that minimizes the sum of squared differences between predicted and actual values. Linear regression is widely used in predictive modeling and can help forecast sales, predict housing prices, and estimate growth rates.

Linear regression works best when there is a linear relationship between the input variables and the target variable. Despite its simplicity, it remains a foundational technique in machine learning due to its interpretability and ease of implementation.
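As a minimal from-scratch sketch, assuming a single input feature, ordinary least squares has a closed-form solution: the slope is the sum of cross-deviations of x and y divided by the sum of squared deviations of x, and the intercept makes the line pass through the point of means. The function names and toy data below are hypothetical, written for illustration rather than taken from any library.

```python
def fit_simple_linear_regression(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares (one feature)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of squared deviations and cross-deviations around the means
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x  # line passes through (mean_x, mean_y)
    return intercept, slope


def predict(intercept, slope, x):
    return intercept + slope * x


# Toy data lying exactly on y = 1 + 2x
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
intercept, slope = fit_simple_linear_regression(xs, ys)
print(intercept, slope)                 # 1.0 2.0
print(predict(intercept, slope, 5.0))   # 11.0
```

With several features the same idea is usually solved with matrix algebra; scikit-learn's `LinearRegression` class handles that general case.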

Types of Regression in Machine Learning

While linear regression is the most common type, other regression techniques also exist, including polynomial regression and ridge regression. Polynomial regression extends linear regression by adding powers of the input features (for example x² and x³), so it can fit a curve while remaining linear in its coefficients, making it suitable for more complex relationships. Ridge regression is linear regression with an added L2 penalty on the size of the coefficients, a form of regularization that helps prevent overfitting in models with many features.
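For a single feature, ridge regression can be sketched by adding a penalty λ·slope² to the squared-error loss while leaving the intercept unpenalized (a common convention, assumed here). Setting the derivatives to zero gives slope = Sxy / (Sxx + λ), so a larger λ shrinks the slope toward zero. `fit_ridge_one_feature` is a hypothetical helper for illustration only.

```python
def fit_ridge_one_feature(xs, ys, lam):
    """Ridge regression with one feature.

    Minimizes sum((y - b0 - b1*x)**2) + lam * b1**2; the intercept b0 is not penalized.
    """
    if lam < 0:
        raise ValueError("lam must be non-negative")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / (sxx + lam)            # the penalty term lam shrinks the slope toward zero
    intercept = mean_y - slope * mean_x
    return intercept, slope


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
for lam in (0.0, 10.0, 100.0):
    print(lam, fit_ridge_one_feature(xs, ys, lam))
```

On this toy data, λ = 0 reproduces ordinary least squares (intercept 1.0, slope 2.0), while λ = 10 halves the slope to 1.0 and raises the intercept to 3.0.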

Algorithm #2: Logistic Regression

Unlike linear regression, which predicts continuous values, logistic regression is employed for classification tasks. It predicts the probability of a binary outcome (e.g., yes/no, true/false) by passing a linear combination of the independent variables through the logistic (sigmoid) function, which maps any real number to a value between 0 and 1. Logistic regression is widely used in areas such as fraud detection, email spam classification, and disease prediction.

Although logistic regression is a linear model (its decision boundary is linear in the input features), it works well for classification because it estimates class probabilities rather than only hard labels. For instance, in medical diagnostics, logistic regression can help predict whether a patient has a particular disease based on input features like age, blood pressure, and cholesterol levels.

When to Use Logistic Regression

Logistic regression is particularly useful when you need to classify data into distinct categories. Its simplicity and efficiency make it a great first-choice algorithm for binary classification problems. For example, a bank might use logistic regression to predict whether a loan applicant is likely to default or not.

Algorithm #3: Decision Trees

Decision trees are a popular machine learning algorithm used for both classification and regression tasks. They work by splitting the data into smaller subsets based on specific decision rules derived from the input features. The result is a tree-like structure where each leaf represents a classification or a regression output.
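To make the splitting step concrete, the hypothetical helper below searches every feature and candidate threshold for the single split that minimizes weighted Gini impurity, one common splitting criterion (entropy is another). A full tree would apply this search recursively to each resulting subset until a stopping rule is met. The loan data is made up for illustration.

```python
def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def best_split(X, y):
    """Return (feature_index, threshold, weighted_gini) of the best split
    of the form x[feature] <= threshold, or None if no split is possible."""
    n = len(X)
    best = None
    for j in range(len(X[0])):
        # Candidate thresholds: midpoints between consecutive distinct values
        values = sorted(set(row[j] for row in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [label for row, label in zip(X, y) if row[j] <= t]
            right = [label for row, label in zip(X, y) if row[j] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (j, t, score)
    return best


# Made-up loan data: [income_in_thousands, existing_debts] -> defaulted (1) or not (0)
X = [[20, 3], [25, 4], [30, 1], [60, 2], [75, 0], [80, 1]]
y = [1, 1, 0, 0, 0, 0]
print(best_split(X, y))  # (0, 27.5, 0.0): "income <= 27.5" separates the classes perfectly
```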

One of the main advantages of decision trees is their interpretability. Data scientists can easily visualize how decisions are made, making it a preferred choice in industries like finance, healthcare, and marketing.

Advantages and Limitations of Decision Trees

Decision trees are highly interpretable, require minimal data preparation, and can handle both numerical and categorical data. However, they are prone to overfitting, especially when dealing with noisy data. To address this, practitioners often prune the tree, limit its depth, or use ensemble methods such as random forests.

Algorithm #4: Random Forest

Random forest is an ensemble learning technique that builds multiple decision trees and merges their outputs to produce a more accurate and stable prediction. This algorithm excels in both classification and regression tasks and is widely used in applications like recommendation systems, fraud detection, and medical diagnostics.

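The power of random forest lies in its ability to reduce overfitting, a common issue with single decision trees. Each tree is trained on a bootstrap sample of the data (rows drawn with replacement) and considers only a random subset of features at each split; the forest then combines the trees by majority vote for classification or by averaging for regression. This typically yields higher accuracy than a single tree and makes the model less sensitive to noise, making it a go-to algorithm for many data scientists.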
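The sketch below illustrates bootstrap sampling, random feature selection, and majority voting. To stay short it assumes depth-one trees (decision stumps) instead of the fully grown trees a real random forest uses, so it is a simplified illustration; all helper names and data are hypothetical.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 means the list is pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def fit_stump(X, y, feature_ids):
    """Fit a one-split tree (a stump) using only the listed feature indices.

    Returns (feature, threshold, left_label, right_label); feature is None when
    no split lowers the impurity, in which case both labels are the majority class.
    """
    n = len(X)
    best_score, best = gini(y), (None, None, majority(y), majority(y))
    for j in feature_ids:
        values = sorted(set(row[j] for row in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [lab for row, lab in zip(X, y) if row[j] <= t]
            right = [lab for row, lab in zip(X, y) if row[j] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best_score:
                best_score, best = score, (j, t, majority(left), majority(right))
    return best


def predict_stump(stump, x):
    j, t, left_label, right_label = stump
    if j is None:
        return left_label
    return left_label if x[j] <= t else right_label


def fit_forest(X, y, n_trees=25, max_features=1, seed=0):
    """Bagging plus random feature selection, with stumps standing in for full trees."""
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        rows = [rng.randrange(n) for _ in range(n)]     # bootstrap: sample rows with replacement
        features = rng.sample(range(d), max_features)   # random feature subset for this split
        forest.append(fit_stump([X[i] for i in rows], [y[i] for i in rows], features))
    return forest


def predict_forest(forest, x):
    """Majority vote across all stumps in the forest."""
    return majority([predict_stump(s, x) for s in forest])


# Made-up loan data: [income_in_thousands, existing_debts] -> defaulted (1) or not (0)
X = [[20, 3], [25, 4], [30, 1], [60, 2], [75, 0], [80, 1]]
y = [1, 1, 0, 0, 0, 0]
forest = fit_forest(X, y)
print(predict_forest(forest, [22, 4]), predict_forest(forest, [70, 0]))
```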

Applications of Random Forest in Industry

In e-commerce, random forest models are used to recommend products to customers based on their past behavior. In the medical field, random forests help identify patients at risk of developing certain conditions by analyzing large datasets of health records.

Algorithm #5: Support Vector Machines (SVM)

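Support vector machines (SVM) are powerful algorithms for classification tasks, especially when dealing with high-dimensional data. An SVM looks for the hyperplane that separates the classes with the largest possible margin, that is, the greatest distance to the nearest training points of each class (the support vectors). Combined with kernel functions, SVMs are also effective when the data is not linearly separable in its original feature space.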
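As an illustration, a soft-margin linear SVM can be trained by subgradient descent on the regularized hinge loss. This is a simplified sketch under assumed hyperparameters (`lam`, `lr`, `epochs`) with labels encoded as -1/+1; dedicated SVM libraries rely on more efficient solvers. The function names and toy data are hypothetical.

```python
def fit_linear_svm(X, y, lam=0.01, lr=0.01, epochs=1000):
    """Soft-margin linear SVM via full-batch subgradient descent.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y_i * (w . x_i + b))).
    Labels must be -1 or +1.
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the L2 regularizer
        grad_b = 0.0
        for xi, yi in zip(X, y):
            score = sum(wj * xj for wj, xj in zip(w, xi)) + b
            if yi * score < 1:  # inside the margin or misclassified: hinge loss is active
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict_svm(w, b, x):
    """Classify by which side of the hyperplane w . x + b = 0 the point lies on."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Made-up, linearly separable toy data with labels in {-1, +1}
X = [[2.0, 1.0], [1.0, 2.0], [2.0, 2.0], [-2.0, -1.0], [-1.0, -2.0], [-2.0, -2.0]]
y = [1, 1, 1, -1, -1, -1]
w, b = fit_linear_svm(X, y)
print(predict_svm(w, b, [3.0, 3.0]), predict_svm(w, b, [-3.0, -3.0]))
```

Only points that fall inside the margin contribute to the hinge-loss gradient, which mirrors the idea that the final boundary is determined by the support vectors.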

SVMs are widely used in image recognition, text categorization, and bioinformatics. Their robustness and ability to handle complex datasets make them a favorite among data scientists tackling classification problems.

Kernel Tricks in SVM

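One of the key strengths of SVM is its ability to use kernel functions. A kernel lets the SVM behave as if the data had been projected into a higher-dimensional space, where classes that cannot be separated by a straight line in the original space may become linearly separable. The trick is that the kernel computes inner products in that space directly from the original features, so the projection never has to be carried out explicitly. This makes SVM versatile, as it can handle both linear and non-linear classification tasks.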
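A small numeric check of the kernel trick, assuming 2-D inputs: the degree-2 polynomial kernel (x · z)² gives the same value as an ordinary dot product after the explicit mapping φ(x) = (x₁², √2·x₁x₂, x₂²), yet it is computed without ever building φ. The Gaussian (RBF) kernel shown alongside corresponds to an infinite-dimensional feature space, which is why it can only be used implicitly. Helper names and inputs are hypothetical.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def poly2_kernel(x, z):
    """Homogeneous degree-2 polynomial kernel, computed entirely in the input space."""
    return dot(x, z) ** 2


def poly2_feature_map(x):
    """Explicit 3-D feature map for 2-D inputs whose dot product equals poly2_kernel."""
    x1, x2 = x
    return [x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2]


def rbf_kernel(x, z, gamma=0.5):
    """Gaussian (RBF) kernel exp(-gamma * ||x - z||^2); its feature space is infinite-dimensional."""
    return math.exp(-gamma * sum((xi - zi) ** 2 for xi, zi in zip(x, z)))


x, z = [1.0, 2.0], [3.0, 4.0]
print(poly2_kernel(x, z))                               # 121.0
print(dot(poly2_feature_map(x), poly2_feature_map(z)))  # 121.0, up to floating-point rounding
print(rbf_kernel(x, x), rbf_kernel(x, z))               # 1.0 for identical points, smaller otherwise
```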

Algorithm #6: K-Nearest Neighbors (KNN)

The K-nearest neighbors (KNN) algorithm is a simple yet powerful tool used for both classification and regression. It works by identifying the ‘k’ nearest data points to a given query and making a prediction based on the majority class (for classification) or average value (for regression).
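A brute-force sketch of both KNN modes, assuming Euclidean distance as the metric: majority vote among the k nearest points for classification, and the average of their targets for regression. Helper names and toy data are hypothetical.

```python
import math
from collections import Counter


def euclidean(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def k_nearest(X, query, k):
    """Indices of the k training points closest to the query (brute force)."""
    order = sorted(range(len(X)), key=lambda i: euclidean(X[i], query))
    return order[:k]


def knn_classify(X, y, query, k=3):
    neighbors = k_nearest(X, query, k)
    return Counter(y[i] for i in neighbors).most_common(1)[0][0]  # majority vote


def knn_regress(X, y, query, k=3):
    neighbors = k_nearest(X, query, k)
    return sum(y[i] for i in neighbors) / len(neighbors)  # average of neighbor targets


X = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.0], [6.0, 6.0], [7.0, 7.5], [6.5, 7.0]]
labels = ["A", "A", "A", "B", "B", "B"]
targets = [10.0, 12.0, 11.0, 30.0, 34.0, 32.0]
print(knn_classify(X, labels, [1.2, 1.4]))  # A
print(knn_regress(X, targets, [6.4, 6.6]))  # 32.0
```

Note that there is no training step: all the work happens at prediction time, which is where the computational cost mentioned below comes from.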

KNN is highly intuitive and easy to implement but can become computationally expensive as the dataset grows. Despite this limitation, it is widely used in applications like recommendation engines, pattern recognition, and market segmentation.

Challenges in KNN

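One of the main challenges with KNN is its sensitivity to the choice of ‘k’ and the distance metric used. Because distances are computed on raw feature values, features measured on large scales can dominate the result unless the data is standardized first. Additionally, a brute-force implementation scales poorly to large datasets, because every prediction requires calculating the distance between the query and every data point in the training set, which can be time-consuming.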
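The effect of feature scale on distance-based methods like KNN can be shown with a made-up example where age is measured in years and income in dollars. Standardizing each column to zero mean and unit standard deviation (one common choice, assumed here) changes which training point is the nearest neighbor. The query has to be scaled with the training set's means and standard deviations. Helper names are hypothetical.

```python
import math


def standardize(X):
    """Scale each column to zero mean and unit (population) standard deviation."""
    n, d = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    # A constant column would have std 0; fall back to 1.0 to avoid dividing by zero
    stds = [math.sqrt(sum((row[j] - means[j]) ** 2 for row in X) / n) or 1.0 for j in range(d)]
    scaled = [[(row[j] - means[j]) / stds[j] for j in range(d)] for row in X]
    return scaled, means, stds


def nearest_index(X, query):
    """Index of the training row closest to the query in Euclidean distance."""
    return min(range(len(X)), key=lambda i: math.dist(X[i], query))


# Made-up customers: [age_in_years, annual_income_in_dollars]
X = [[25, 52000], [60, 50500], [40, 80000], [30, 20000]]
query = [25, 50000]
print(nearest_index(X, query))  # 1: raw distances are dominated by the income column

scaled, means, stds = standardize(X)
scaled_query = [(q - m) / s for q, m, s in zip(query, means, stds)]
print(nearest_index(scaled, scaled_query))  # 0: after scaling, the 35-year age gap counts too
```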

Algorithm #7: K-Means Clustering

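K-means clustering is an unsupervised learning algorithm used to group similar data points into clusters. Starting from ‘k’ initial cluster centers (centroids), it repeatedly assigns each data point to its nearest centroid and then moves each centroid to the mean of the points assigned to it, stopping when the assignments no longer change. This process minimizes, at least locally, the sum of squared distances between points and the centroids of their clusters.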
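A minimal sketch of Lloyd's algorithm, the standard k-means procedure. For reproducibility it assumes a deterministic farthest-first initialization; many libraries default to the randomized k-means++ seeding instead. `inertia` computes the within-cluster sum of squared distances that k-means minimizes. Names and toy data are hypothetical.

```python
import math


def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm: alternate assignment and centroid-update steps.

    Initialization is deterministic farthest-first seeding (an illustrative choice).
    """
    centroids = [list(points[0])]
    while len(centroids) < k:
        # Next seed: the point farthest from its nearest existing centroid
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(far))

    assignment = None
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid
        new_assignment = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        if new_assignment == assignment:
            break  # converged: assignments stopped changing
        assignment = new_assignment
        # Update step: move each centroid to the mean of its assigned points
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # leave an empty cluster's centroid where it is
                centroids[c] = [sum(coord) / len(members) for coord in zip(*members)]
    return centroids, assignment


def inertia(points, centroids, assignment):
    """Within-cluster sum of squared distances to the assigned centroids."""
    return sum(math.dist(p, centroids[a]) ** 2 for p, a in zip(points, assignment))


points = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.0], [8.0, 8.0], [8.5, 9.0], [9.0, 8.0]]
centroids, labels = kmeans(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
print(centroids, inertia(points, centroids, labels))
```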

K-means clustering is commonly used in image segmentation, customer segmentation, and market analysis. It helps uncover groupings in data that may not be apparent from inspecting the raw features.

Evaluating K-Means Clustering Effectiveness

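To evaluate the performance of K-means clustering, data scientists often use the silhouette score and the elbow method. The silhouette score measures how close each point is to its own cluster compared with the nearest neighboring cluster; it ranges from -1 to 1, with higher values indicating better-separated clusters. The elbow method plots the within-cluster sum of squared distances against the number of clusters and looks for the point where adding more clusters stops producing large improvements. Together, these help determine a suitable number of clusters and assess how well the algorithm has grouped similar data points together.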
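The silhouette coefficient of a point is s = (b - a) / max(a, b), where a is its mean distance to the other members of its own cluster and b is its mean distance to the members of the nearest other cluster. Below is a from-scratch sketch, assuming Euclidean distance and the common convention that points in single-member clusters score 0; for real work, `sklearn.metrics.silhouette_score` computes the same metric. The toy data and labelings are made up.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points; needs at least two clusters."""
    clusters = set(labels)
    if len(clusters) < 2:
        raise ValueError("silhouette score needs at least two clusters")
    scores = []
    for i, p in enumerate(points):
        own = [math.dist(p, q) for j, q in enumerate(points) if labels[j] == labels[i] and j != i]
        if not own:  # single-member cluster: use the convention s = 0
            scores.append(0.0)
            continue
        a = sum(own) / len(own)  # mean distance to the rest of its own cluster
        b = min(  # mean distance to the members of the nearest other cluster
            sum(math.dist(p, q) for j, q in enumerate(points) if labels[j] == c) / labels.count(c)
            for c in clusters
            if c != labels[i]
        )
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


points = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.0], [8.0, 8.0], [8.5, 9.0], [9.0, 8.0]]
good = [0, 0, 0, 1, 1, 1]  # matches the two visible groups
bad = [0, 1, 0, 1, 0, 1]   # mixes the groups together
print(silhouette_score(points, good), silhouette_score(points, bad))
```

On this toy data the labeling that matches the two visible groups scores close to 0.9, while the mixed labeling scores near zero.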

Beyond the Basics: Deep Learning Algorithms

While this article focuses on traditional machine learning algorithms, it is worth mentioning that deep learning has gained prominence in recent years. Algorithms like neural networks, convolutional neural networks (CNNs), and recurrent neural networks (RNNs) have revolutionized fields such as computer vision and natural language processing.

How to Choose the Right Algorithm

Selecting the right algorithm depends on the nature of the data, the complexity of the problem, and the available computational resources. For example, if you are working with labeled data for classification, you might choose between logistic regression and SVM. For unsupervised learning tasks, algorithms like K-means or hierarchical clustering are more appropriate.

Tuning Machine Learning Models

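To get the best performance from a machine learning model, it’s important to tune its hyperparameters, the settings chosen before training, such as the number of neighbors in KNN or the regularization strength in ridge regression. Grid search tries every combination from a predefined set of values, while random search samples combinations at random; both are usually scored with cross-validation so that the chosen settings generalize to unseen data.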
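A sketch of grid search over two KNN hyperparameters (k and the distance metric), with each combination scored by 3-fold cross-validation. The fold assignment (row i is held out in fold i mod 3), helper names, and data are assumptions for illustration; scikit-learn packages the same idea as `GridSearchCV` and `RandomizedSearchCV`.

```python
import math
from collections import Counter
from itertools import product

DISTANCES = {
    "euclidean": math.dist,
    "manhattan": lambda a, b: sum(abs(ai - bi) for ai, bi in zip(a, b)),
}


def knn_predict(X_train, y_train, query, k, metric):
    dist = DISTANCES[metric]
    order = sorted(range(len(X_train)), key=lambda i: dist(X_train[i], query))
    return Counter(y_train[i] for i in order[:k]).most_common(1)[0][0]


def cross_val_accuracy(X, y, k, metric, n_folds=3):
    """Mean accuracy over n_folds folds; row i is held out in fold i % n_folds."""
    scores = []
    for f in range(n_folds):
        train = [i for i in range(len(X)) if i % n_folds != f]
        test = [i for i in range(len(X)) if i % n_folds == f]
        X_tr, y_tr = [X[i] for i in train], [y[i] for i in train]
        correct = sum(knn_predict(X_tr, y_tr, X[i], k, metric) == y[i] for i in test)
        scores.append(correct / len(test))
    return sum(scores) / n_folds


def grid_search(X, y, grid):
    """Evaluate every (k, metric) combination; return the best one and all scores."""
    results = {
        (k, metric): cross_val_accuracy(X, y, k, metric)
        for k, metric in product(grid["k"], grid["metric"])
    }
    best = max(results, key=results.get)
    return best, results


X = [[1, 1], [8, 8], [1, 2], [9, 8], [2, 1], [8, 9], [2, 2], [9, 9], [1.5, 1.5]]
y = [0, 1, 0, 1, 0, 1, 0, 1, 0]
best, results = grid_search(X, y, {"k": [1, 3, 5], "metric": ["euclidean", "manhattan"]})
print(best, results[best])
```

On this toy data k = 5 does worse in one fold: that fold's training split holds four class-0 points and only two class-1 points, so five neighbors outvote the correct class.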

Interpreting Machine Learning Model Results

Once your model is trained, interpreting the results is just as important as building the model. For classification, metrics like accuracy, precision, recall, and F1-score show how well your model is performing. In regression tasks, mean squared error (MSE) and R-squared are commonly used to measure performance.
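The formulas behind these metrics are short enough to write out directly. This is a from-scratch sketch with helper names and example labels assumed for illustration; in practice `sklearn.metrics` provides `precision_score`, `recall_score`, `f1_score`, `mean_squared_error`, and `r2_score`.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall, F1) for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = len(pairs) - tp - fp - fn
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are correct
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of actual positives that were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1


def regression_metrics(y_true, y_pred):
    """Return (MSE, R-squared) for numeric predictions."""
    n = len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    mean_t = sum(y_true) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)            # total sum of squares
    return ss_res / n, 1 - ss_res / ss_tot


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]
print([round(m, 3) for m in classification_metrics(y_true, y_pred)])  # [0.625, 0.6, 0.75, 0.667]
print(regression_metrics([3.0, 5.0, 7.0], [2.0, 5.0, 8.0]))         # (0.6666666666666666, 0.75)
```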

Tools and Frameworks for Machine Learning

Several popular frameworks make implementing machine learning algorithms easier. The scikit-learn library provides ready-made implementations of all seven algorithms covered in this article, while TensorFlow and PyTorch focus mainly on building and training neural networks. These tools are widely adopted in the data science community and come with extensive documentation and support.

The Future of Machine Learning Algorithms

The field of machine learning is constantly evolving. New algorithms and techniques are being developed to handle even larger datasets, improve prediction accuracy, and make models more interpretable. As AI continues to advance, machine learning algorithms will play an even more crucial role in shaping the future of industries across the globe.


Conclusion

In summary, these seven machine learning algorithms—linear regression, logistic regression, decision trees, random forest, SVM, KNN, and K-means clustering—form the foundation of data science. Understanding how and when to use these algorithms is essential for any data scientist looking to excel in the field. As technology continues to evolve, the importance of mastering these algorithms cannot be overstated.