The world of artificial intelligence has evolved rapidly over the past decade, with deep learning emerging as a central technology for solving complex problems like image recognition, natural language processing, and time series forecasting. TensorFlow, an open-source library developed by Google, has become one of the most popular frameworks for building and training deep learning models. In this article, we will guide you through how to train a deep learning model using TensorFlow, from preparing your data to fine-tuning and deploying your model.

Understanding Deep Learning

Before diving into TensorFlow, it’s essential to understand what a deep learning model is. At its core, deep learning is a subset of machine learning that uses artificial neural networks with multiple layers (hence the term “deep”) to model and learn from vast amounts of data. These models are designed to automatically discover patterns and insights from the input data by progressively abstracting high-level features at each layer. Unlike traditional algorithms, deep learning excels at handling unstructured data like images, text, and audio.

TensorFlow Overview

TensorFlow is a powerful open-source framework that allows developers to build and train deep learning models efficiently. Its ability to run computations on both CPUs and GPUs, and even on distributed systems, makes it a versatile tool for large-scale machine learning tasks. The framework supports various APIs, including low-level ones for building custom operations and high-level ones like Keras, which provides a more user-friendly interface for defining and training models.

The Key Components of TensorFlow

TensorFlow is built around several core concepts, including:

- Tensors: Multidimensional arrays that serve as the foundation for all computations.

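- Graphs: Computational graphs that define the flow of operations and data. In TensorFlow 2.x, operations run eagerly by default, and graphs are built by wrapping Python functions with tf.function.

- Sessions: An environment for executing graphs in TensorFlow 1.x. TensorFlow 2.x removed sessions from the main API; they remain available only through tf.compat.v1.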

- Keras API: A higher-level API within TensorFlow for defining and training models quickly.
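
As a rough illustration of these concepts, the sketch below creates a tensor and wraps a small function with tf.function so that TensorFlow traces it into a graph. It assumes TensorFlow 2.x; the function name scaled_sum and the values are made up for this example.

```python
import tensorflow as tf

# A rank-2 tensor (a 2x2 matrix) of 32-bit floats.
x = tf.constant([[1.0, 2.0], [3.0, 4.0]])


@tf.function  # traces the Python function into a TensorFlow graph on first call
def scaled_sum(t, factor):
    # Sum every element of the tensor, then multiply by a scalar factor.
    return tf.reduce_sum(t) * factor


result = scaled_sum(x, tf.constant(2.0))
print(x.shape, x.dtype)
print(float(result))
```

No session is needed here: the call runs immediately and returns a tensor whose value can be read directly.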

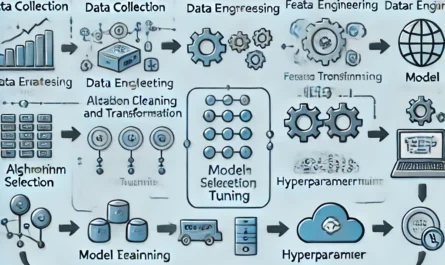

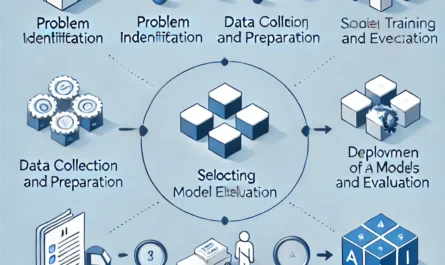

How to Train a Deep Learning Model Using TensorFlow

Preparing Data for Deep Learning with TensorFlow

Data is the fuel that powers any deep learning model. Before training a model, it’s crucial to organize and preprocess your dataset to ensure optimal performance.

- Dataset Splitting: Train, Validation, and Test Sets

Start by splitting your dataset into three distinct sets:

- Training Set: The model learns from this data.

- Validation Set: Used to tune hyperparameters and evaluate the model’s performance during training.

- Test Set: The final evaluation happens here after training is complete.
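
One way to sketch a three-way split, independent of any framework, is to shuffle the example indices and slice them. The helper name split_indices and the 80/10/10 proportions are assumptions chosen for illustration, not a recommendation from TensorFlow itself.

```python
import random


def split_indices(num_examples, val_fraction=0.1, test_fraction=0.1, seed=42):
    """Return three disjoint lists of example indices: train, validation, test."""
    indices = list(range(num_examples))
    # Shuffle with a fixed seed so the split is reproducible.
    random.Random(seed).shuffle(indices)
    num_test = int(num_examples * test_fraction)
    num_val = int(num_examples * val_fraction)
    test_idx = indices[:num_test]
    val_idx = indices[num_test:num_test + num_val]
    train_idx = indices[num_test + num_val:]
    return train_idx, val_idx, test_idx


train_idx, val_idx, test_idx = split_indices(1000)
print(len(train_idx), len(val_idx), len(test_idx))  # 800 100 100
```

The resulting index lists can then be used to select rows from your arrays before passing them to a model.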

- Data Preprocessing: Normalization and Augmentation

TensorFlow provides several tools for preprocessing data, such as scaling numerical values (normalization) or augmenting images to artificially increase the dataset’s size. For instance, when dealing with images, techniques like flipping, cropping, or rotating can help the model generalize better.
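
A minimal sketch of both ideas for image data, assuming 8-bit pixel values and TensorFlow 2.x. The helper names normalize and augment, the tiny example batch, and the brightness range are assumptions for illustration.

```python
import tensorflow as tf


def normalize(images):
    """Scale uint8 pixel values from [0, 255] to floats in [0, 1]."""
    return tf.cast(images, tf.float32) / 255.0


def augment(images):
    """Apply a random horizontal flip and a small random brightness shift."""
    images = tf.image.random_flip_left_right(images)
    images = tf.image.random_brightness(images, max_delta=0.1)
    # Brightness shifts can leave [0, 1], so clip back into range.
    return tf.clip_by_value(images, 0.0, 1.0)


# One grayscale image of height 1 and width 2: shape (batch, height, width, channels).
batch = tf.constant([[[[0], [255]]]], dtype=tf.uint8)
normalized = normalize(batch)
augmented = augment(normalized)
print(normalized.shape, augmented.shape)
```

Augmentation is normally applied to the training set only, so that validation and test results reflect unmodified data.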

Building the Neural Network Architecture

When it comes to building a neural network, TensorFlow, with its Keras API, simplifies the process. The primary task is to define the number of layers, the types of layers, and how they’re connected.

Defining Layers and Neurons in TensorFlow

Layers in a neural network are composed of interconnected neurons. In TensorFlow, you can create dense layers (fully connected layers), convolutional layers for images, or recurrent layers for sequential data. The choice of architecture depends heavily on the nature of your problem.
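
As a small sketch, a fully connected classifier can be stacked with the Keras Sequential API. The input size (20 features), hidden width (64), and number of classes (10) are arbitrary values picked for this example.

```python
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(20,)),                      # 20 input features per example
    tf.keras.layers.Dense(64, activation="relu"),     # hidden fully connected layer
    tf.keras.layers.Dense(10, activation="softmax"),  # one output probability per class
])

model.summary()
```

Convolutional layers (tf.keras.layers.Conv2D) and recurrent layers (tf.keras.layers.LSTM) are added to a model in the same way.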

The Role of Activation Functions

Activation functions introduce non-linearity into the network, allowing the model to capture complex patterns. Common activation functions include ReLU (Rectified Linear Unit) for hidden layers and softmax for output layers in multi-class classification tasks, where it turns the raw outputs into probabilities that sum to 1.
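
To make the two functions concrete, here is a plain-Python sketch of what they compute. It is written for readability rather than speed; inside a model you would normally use the built-in activations.

```python
import math


def relu(x):
    """ReLU passes positive values through and maps negatives to zero."""
    return max(0.0, x)


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(logits)
    # Subtracting the maximum keeps exp() from overflowing on large scores.
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


hidden = [relu(v) for v in (-2.0, 0.0, 3.0)]
probs = softmax([2.0, 1.0, 0.1])
print(hidden)  # [0.0, 0.0, 3.0]
print(probs)
```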

How to Select the Right Optimizer

Optimizers control how the model updates its weights based on the loss function’s output. Popular optimizers include:

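- SGD (Stochastic Gradient Descent): Updates the weights using the gradient of the loss on each mini-batch, scaled by a learning rate. It is simple and is often combined with momentum.

- Adam (Adaptive Moment Estimation): Combines momentum with the per-parameter adaptive learning rates of RMSprop, which often leads to faster convergence with little tuning.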
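
The update rules behind these two optimizers can be sketched in plain Python on a one-parameter problem. The loss (w - 3)^2, the learning rate of 0.1, and the function names are assumptions for illustration; the Adam constants shown are the commonly used defaults.

```python
import math


def grad(w):
    """Gradient of the toy loss (w - 3)^2, which is minimized at w = 3."""
    return 2.0 * (w - 3.0)


def sgd_step(w, lr=0.1):
    """Plain gradient descent: move against the gradient, scaled by lr."""
    return w - lr * grad(w)


def adam_step(w, m, v, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; m and v are running averages, t is the step count from 1."""
    g = grad(w)
    m = beta1 * m + (1.0 - beta1) * g        # momentum-like average of gradients
    v = beta2 * v + (1.0 - beta2) * g * g    # average of squared gradients
    m_hat = m / (1.0 - beta1 ** t)           # bias correction for early steps
    v_hat = v / (1.0 - beta2 ** t)
    return w - lr * m_hat / (math.sqrt(v_hat) + eps), m, v


w_sgd = sgd_step(1.0)                     # gradient is -4, so w moves by 0.4
w_adam, m, v = adam_step(1.0, 0.0, 0.0, t=1)

w = 0.0
for _ in range(50):                       # repeated SGD steps approach the minimum
    w = sgd_step(w)
print(w_sgd, w_adam, w)
```

Note that Adam's first step has a size close to the learning rate regardless of how large the gradient is, because the update is normalized by the gradient's running magnitude.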

Loss Functions in TensorFlow: MSE, Cross-Entropy

The loss function quantifies how well the model’s predictions match the actual labels. For regression tasks, Mean Squared Error (MSE) is commonly used, while Cross-Entropy is suitable for classification tasks.
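
A short sketch of both losses using the Keras loss classes, with hand-picked values so the results are easy to check by hand. The numbers are illustrative only.

```python
import tensorflow as tf

# Regression: mean of the squared differences between targets and predictions.
mse = tf.keras.losses.MeanSquaredError()
y_true = tf.constant([[1.0], [2.0], [3.0]])
y_pred = tf.constant([[1.5], [2.0], [2.5]])
mse_value = float(mse(y_true, y_pred))   # (0.25 + 0 + 0.25) / 3

# Classification: negative log of the probability assigned to the true class.
cross_entropy = tf.keras.losses.SparseCategoricalCrossentropy()
label = tf.constant([1])                 # the true class is index 1
probs = tf.constant([[0.1, 0.7, 0.2]])   # predicted class probabilities
ce_value = float(cross_entropy(label, probs))  # -ln(0.7)

print(mse_value, ce_value)
```

SparseCategoricalCrossentropy expects integer class labels; for one-hot encoded labels, CategoricalCrossentropy is the matching choice.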

Compiling the Model

Once the architecture is defined, the model needs to be compiled. In this step, you combine the loss function, optimizer, and evaluation metrics.
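
A minimal sketch of the compile step for a three-class classifier. The layer sizes and the learning rate are assumptions for this example.

```python
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax"),
])

model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),  # how weights are updated
    loss="sparse_categorical_crossentropy",                  # what is minimized
    metrics=["accuracy"],                                    # what is reported
)
```

Compiling does not train anything; it only attaches the optimizer, loss, and metrics that training and evaluation will use.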

Training the Model

Now comes the core step: training the model. During training, the model learns by iteratively updating its weights based on the loss calculated for each batch of data.

Epochs and Batch Size Explained

- Epochs: One epoch is when the model has seen all the training data once. Typically, models require multiple epochs to learn effectively.

- Batch Size: Rather than processing the entire dataset at once, data is split into smaller batches. The model updates its weights after each batch.
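
The relationship between dataset size, batch size, and the number of weight updates is simple arithmetic, sketched below. The dataset size of 1000, batch size of 32, and 10 epochs are example values.

```python
import math


def steps_per_epoch(num_examples, batch_size):
    """Number of batches (and weight updates) needed to see every example once."""
    # The last batch may be smaller than batch_size, hence the ceiling.
    return math.ceil(num_examples / batch_size)


steps = steps_per_epoch(1000, 32)
total_updates = steps * 10  # 10 epochs
print(steps, total_updates)  # 32 320
```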

Monitoring Training Progress with Callbacks

TensorFlow allows you to use callbacks like EarlyStopping to halt training when the model stops improving, or ModelCheckpoint to save the best version of the model during training.
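
The sketch below wires both callbacks into model.fit on a small synthetic dataset. The data, layer sizes, patience value, and checkpoint file name are all made up for illustration, and it assumes a recent TensorFlow release that supports the .keras file format.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Synthetic binary-classification data, invented for this example.
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 8)).astype("float32")
labels = (features.sum(axis=1) > 0).astype("int32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

checkpoint_path = os.path.join(tempfile.mkdtemp(), "best_model.keras")
callbacks = [
    # Stop if validation loss has not improved for 3 epochs in a row.
    tf.keras.callbacks.EarlyStopping(
        monitor="val_loss", patience=3, restore_best_weights=True
    ),
    # Overwrite the saved model only when validation loss improves.
    tf.keras.callbacks.ModelCheckpoint(
        checkpoint_path, monitor="val_loss", save_best_only=True
    ),
]

history = model.fit(
    features,
    labels,
    validation_split=0.2,  # hold out the last 20% of rows for validation
    epochs=20,
    batch_size=32,
    callbacks=callbacks,
    verbose=0,
)
print(len(history.history["loss"]), "epochs run")
```

Both callbacks monitor val_loss here, so they only make sense when validation data is supplied to fit.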

Fine-Tuning a Deep Learning Model in TensorFlow

After initial training, you may want to fine-tune the model for improved performance.

Using Pre-trained Models and Transfer Learning

If you’re working with limited data, transfer learning lets you reuse features that a model has already learned from a much larger dataset. TensorFlow offers several pre-trained models (such as VGG and ResNet) through tf.keras.applications that you can fine-tune on your dataset.
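
A hedged sketch of this pattern with ResNet50: freeze the pre-trained base and train only a new classification head. The helper name build_transfer_model, the input size, and the single Dense head are assumptions for illustration. With weights="imagenet", the weights are downloaded on first use.

```python
import tensorflow as tf


def build_transfer_model(num_classes, weights="imagenet", input_shape=(224, 224, 3)):
    """Stack a new softmax head on top of a frozen ResNet50 feature extractor."""
    base = tf.keras.applications.ResNet50(
        include_top=False,   # drop the original ImageNet classifier
        weights=weights,     # pass None to skip loading pre-trained weights
        input_shape=input_shape,
        pooling="avg",       # global average pooling yields one vector per image
    )
    base.trainable = False   # freeze the pre-trained layers

    inputs = tf.keras.Input(shape=input_shape)
    # training=False keeps batch-normalization layers in inference mode.
    features = base(inputs, training=False)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(features)
    return tf.keras.Model(inputs, outputs)
```

A typical call would be build_transfer_model(num_classes=5), followed by compile and fit as usual. Images should first be passed through tf.keras.applications.resnet50.preprocess_input so they match what the base model was trained on. To fine-tune further, some of the base layers can later be unfrozen and trained with a low learning rate.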

Adjusting Learning Rates and Regularization Techniques

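To reduce overfitting, apply regularization techniques such as L2 regularization or dropout layers. Lowering the learning rate, or decaying it over the course of training, can also help the model settle on weights that generalize better.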
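
These techniques can be combined in a few lines of Keras, as in the sketch below. The regularization strength, dropout rate, and decay settings are placeholder values, not tuned recommendations.

```python
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(20,)),
    tf.keras.layers.Dense(
        64,
        activation="relu",
        # L2 regularization adds a penalty on large weights to the loss.
        kernel_regularizer=tf.keras.regularizers.L2(1e-4),
    ),
    # Dropout randomly zeroes half of the activations during training only.
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(10, activation="softmax"),
])

# Multiply the learning rate by 0.9 every 1000 training steps.
lr_schedule = tf.keras.optimizers.schedules.ExponentialDecay(
    initial_learning_rate=1e-3, decay_steps=1000, decay_rate=0.9
)

model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=lr_schedule),
    loss="sparse_categorical_crossentropy",
    metrics=["accuracy"],
)
```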

Evaluation of Model Performance

Once the model is trained, it’s essential to evaluate its performance using various metrics.

Evaluating with TensorFlow: Accuracy, Precision, and Recall

Accuracy is a basic metric for classification tasks. However, in imbalanced datasets, metrics like precision, recall, and F1-score offer deeper insights into model performance.
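
The plain-Python sketch below shows why accuracy alone can mislead on imbalanced data. The labels and predictions are invented: 8 negatives, 2 positives, and a model that finds only one of the positives.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return accuracy, precision, recall, f1


y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0, 1, 0]
accuracy, precision, recall, f1 = classification_metrics(y_true, y_pred)
print(accuracy, precision, recall, f1)
```

Accuracy is 0.9 here, yet recall is only 0.5 because half of the positive examples were missed. In Keras, tf.keras.metrics.Precision and tf.keras.metrics.Recall can be passed to compile to track the same quantities during training.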

Confusion Matrix and AUC

A confusion matrix tabulates the model’s true positives, false positives, true negatives, and false negatives, while the Area Under the ROC Curve (AUC) summarizes how well the model separates the positive and negative classes across all decision thresholds.
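
Both can be computed with TensorFlow, as in this sketch on six invented examples. The 0.5 decision threshold is an assumption for illustration. Keras approximates AUC over a fixed set of thresholds, so the printed value is close to, rather than exactly, the true area.

```python
import tensorflow as tf

y_true = tf.constant([0, 0, 1, 1, 1, 0])
y_score = tf.constant([0.1, 0.6, 0.8, 0.9, 0.4, 0.2])  # predicted probability of class 1

# Turn scores into hard predictions with a 0.5 threshold.
y_pred = tf.cast(y_score >= 0.5, tf.int32)

# Rows are true classes, columns are predicted classes.
cm = tf.math.confusion_matrix(y_true, y_pred, num_classes=2)
print(cm.numpy())

# AUC uses the raw scores, not the thresholded predictions.
auc_metric = tf.keras.metrics.AUC()
auc_metric.update_state(y_true, y_score)
auc_value = float(auc_metric.result())
print(auc_value)
```

In the matrix, the top-left cell counts true negatives, the top-right false positives, the bottom-left false negatives, and the bottom-right true positives.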